Data Analytics

A Detailed Guide on Database Schemas, Types and Benefits

A Detailed Guide on Database Schemas, Types and Benefits

When organisations collect, store and analyse large volumes of data, the structure underpinning that data becomes critical. Research finds that 64% of companies identify data quality as their top data-integrity challenge, and 67% say they don’t fully trust their data when making decisions. A clearly defined database schema helps mitigate those issues by ensuring that tables, relationships and constraints are established in an orderly and accessible manner.

In this article we’ll explore what a comprehensive database schema comprises - its key components, types and styles, and its key benefits. We’ll also examine common pitfalls and learn best practices for charting an effective structure. And finally, we’ll see how Lumi can connect directly to existing relational structures and intelligently uncover how different data entities interact.

What is a Database Schema?

A database schema is the logical framework that defines how data is structured, stored, and related within a database. It outlines the organization of tables, fields, relationships, and constraints that ensure information remains consistent and accessible.

For instance, in a supply chain system, the database schema might include tables such as Suppliers, Shipments, Warehouses, Inventory, and Orders. These tables are linked through shared keys; for example, Shipments referencing Suppliers and Warehouses, allowing the organization to trace goods from procurement to delivery with accuracy and transparency.

Components of a Database Schema

Table

A table is the core structure within a database schema that stores data about a specific entity, such as products, customers, or orders, in rows and columns. Each row represents an individual record, while columns define the attributes of that entity, enabling efficient organization and retrieval of related information.

Field

A field (or column) defines a single attribute within a table, specifying the type of information each record holds, for instance, a Customer_Name or Order_Date. Fields determine the granularity of stored data and serve as the basis for indexing, filtering, and establishing relationships between tables.

Data type

A data type dictates the kind of values that a field can store, such as integers, decimals, strings, dates, or boolean values. Choosing the right data type ensures consistency, optimizes storage efficiency, and enhances performance during data processing and validation.

Constraint

A constraint enforces rules on the data to maintain integrity and accuracy across the schema, preventing invalid or inconsistent entries. Common examples include PRIMARY KEY for unique identification, to make sure each row in the table has a unique identifier.

Relationship

A relationship defines how data in one table connects to data in another, ensuring logical consistency across entities. Through one-to-one, one-to-many, or many-to-many associations, which are often implemented via foreign keys, relationships enable unified insights across complex data structures.

Types of Database Schemas

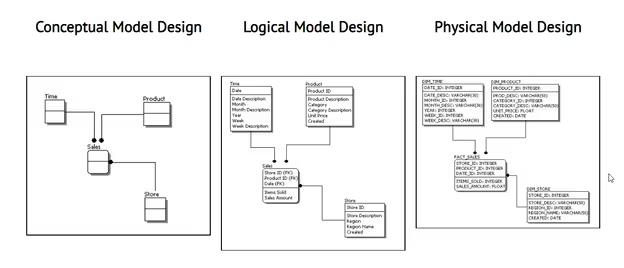

A conceptual schema defines the high level business entities and relationships independent of any technology. A logical schema translates that conceptual view into structured tables and fields, while a physical schema describes how those tables are actually stored and optimized in a specific database system.

Conceptual schema

.avif)

A conceptual schema presents a high-level view of how data is organized and connected across an organization, without reference to specific technologies or storage systems. It outlines the main entities, such as Customers, Orders, Products, and Payments, and illustrates how they relate within the business context. In a retail environment, for instance, the schema would show that each Customer can place multiple Orders, each Order contains several Products, and every Order links to one or more Payments, providing a clear picture of how information flows through the business.

This schema acts as a connecting layer between business objectives and technical database design, ensuring that analysts, engineers, and decision-makers share a common view and understanding of how data is structured and interlinked across the organization. In essence, it defines what data exists and how it relates conceptually, without specifying how or where it will be stored.

Logical schema

.avif)

A logical schema converts the high-level view of a conceptual design into a detailed structure that shows how data is logically organized within a database. It defines tables, fields, primary and foreign keys, and relationships, but stays independent of any specific database software or hardware. For example, it may specify that Customer_ID is the primary key in the Customers table and connects to Orders through a foreign key, outlining how data points relate without addressing where they’re stored.

In practice, this schema acts as the technical blueprint that guides database creation, bridging the gap between conceptual understanding and physical execution. By outlining entities, attributes, and constraints in technical but platform-neutral terms, the logical schema ensures consistency, supports normalization, and prepares the structure for efficient deployment on any chosen DBMS.

Physical schema

.avif)

A physical schema describes how data is stored, accessed, and managed within a specific database system. It turns the logical design into practical details like how tables are saved on disk, how indexes speed up queries, how data is partitioned for efficiency, and how storage is allocated for performance and security. For example, it may define that the Orders table is stored on high-speed drives, indexed by Order_ID for faster searches, and replicated across multiple servers to ensure reliability and uptime.

This schema ensures that the database operates efficiently in real-world conditions by aligning data structures with hardware capabilities and workload demands. The physical schema directly influences query speed, storage utilization, and system reliability, making it essential for maintaining high performance and data availability at scale.

Of these three, the logical schema is the most commonly used type in practice. It serves as the working model for most database design and management tasks because it defines the structure, such as tables, fields, keys, and relationships that database developers and analysts interact with daily. While the conceptual schema guides planning and the physical schema governs implementation, the logical schema is the active layer that aligns both functions, forming the foundation for querying, optimization, and maintenance across most business and analytics systems.

Common Styles of Database Schemas

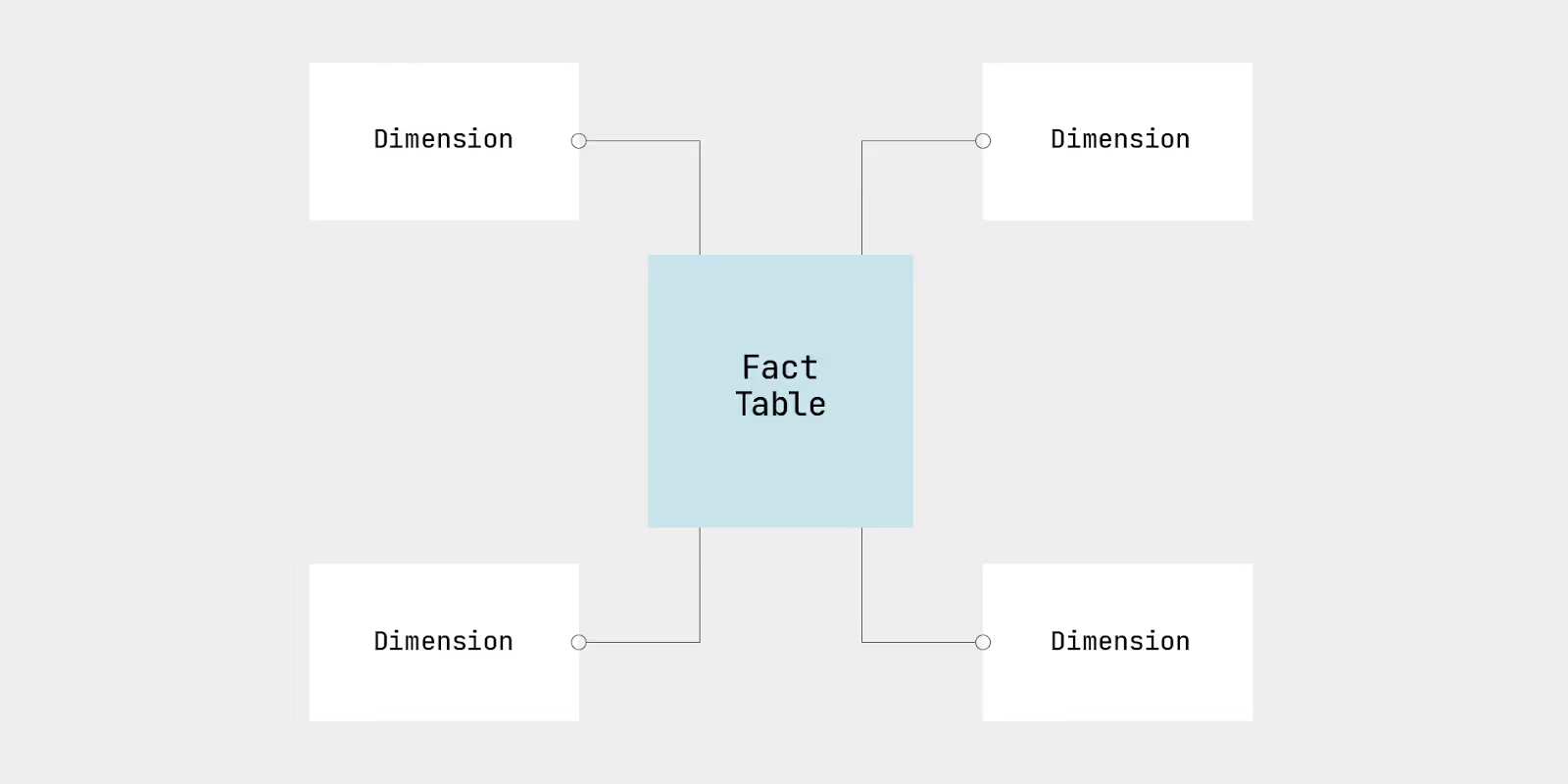

Star schema

A star schema is a common data modeling structure used in analytics and data warehousing to organize data into fact and dimension tables. The central fact table contains measurable business data such as shipment volumes, inventory levels, or order fulfillment metrics, while the surrounding dimension tables hold descriptive attributes like suppliers, warehouses, routes, and time periods. This structure simplifies queries and improves performance by creating a clear, intuitive layout that supports fast data retrieval and easy analysis.

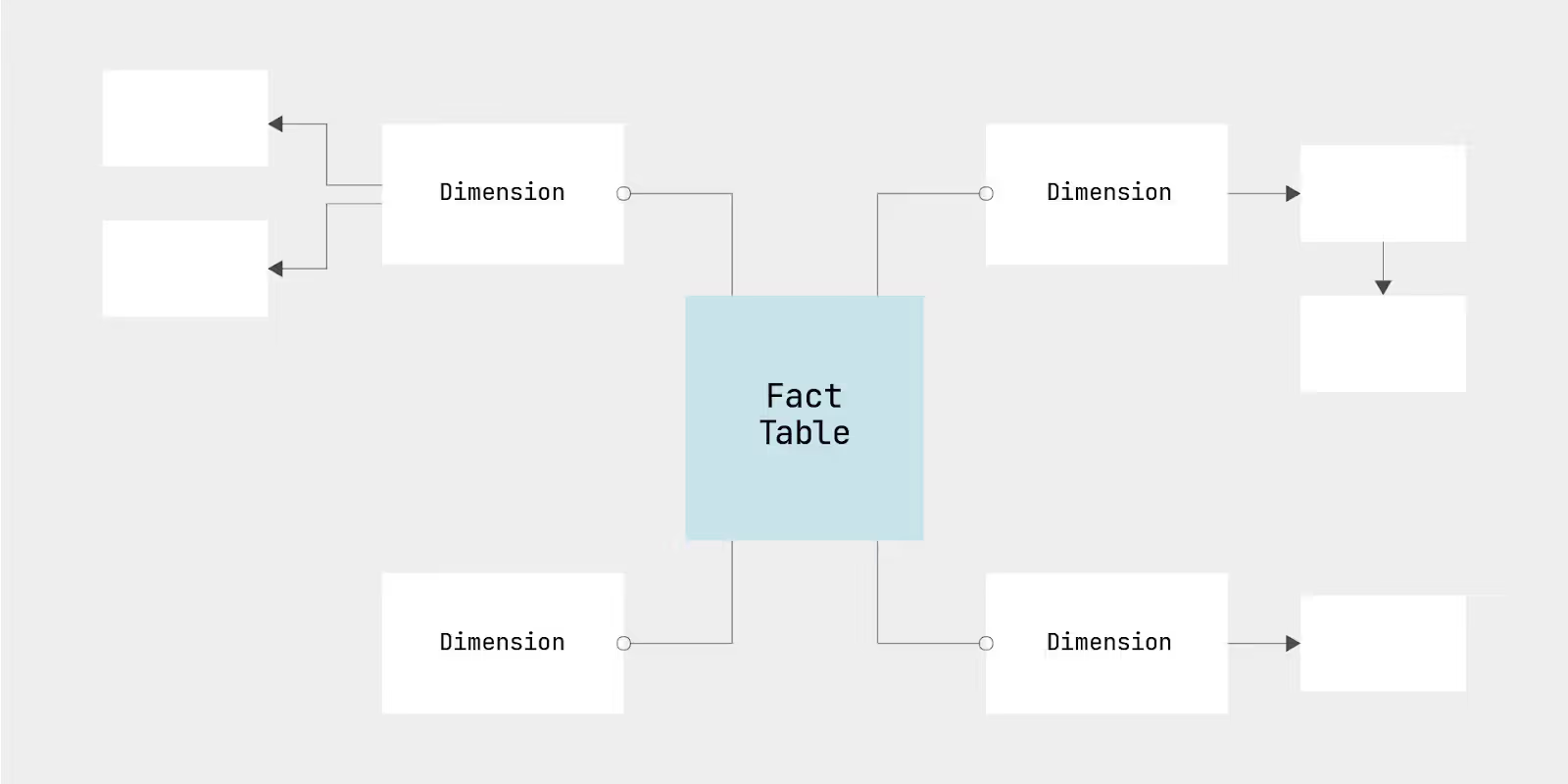

Snowflake schema

A snowflake schema expands on the star schema and normalizes dimension tables into multiple related sub-tables, producing a more granular and organized data model. In supply chain analytics, for instance, a product dimension could be divided into separate tables for product categories, suppliers, and regions, which enables cleaner relationships and more consistent updates across large datasets. While this design enhances data integrity and reduces redundancy, it often requires more complex joins during query execution, which can slightly impact performance compared to the simpler star schema.

Benefits of a Database Schema

Data organization

A well-designed schema groups related data into meaningful tables and relationships, such as linking suppliers, inventory, and shipments in a supply chain system, so users can navigate complex datasets without confusion. This structured approach enhances data integrity and ensures that business processes and analyses are built on a clear, reliable foundation.

Scalability and maintenance

A database schema provides the structure needed for systems to scale and adapt as business requirements change without affecting performance or data reliability. It enables new entities, such as suppliers, warehouses, or product categories, to be added without disrupting existing frameworks. This structure also simplifies maintenance activities like updates, indexing, and backups, keeping the database consistent and efficient over time.

Data access and security

A database schema establishes clear boundaries for how data is accessed, shared, and protected within an organization. Through defined roles, permissions, and relationships, it enforces security measures while allowing authorized users to work efficiently with the data they need. This framework helps protect sensitive assets such as supplier records, customer details, and financial information from unauthorized access or misuse.

Integrity

A database schema upholds the consistency and reliability of information across all tables and relationships. It enforces rules such as primary and foreign key constraints to prevent duplication, missing links, or contradictory data. This integrity ensures that every transaction, from supplier updates to order processing, reflects reliable and verifiable information across the organization.

Challenges of Database Schema

Performance optimization

As databases grow in size and complexity, maintaining fast query responses and efficient data retrieval becomes increasingly difficult. Without proper indexing, query tuning, or resource management, performance can degrade, slowing down analytics and daily operations.

To maintain optimal performance, teams should design indexes carefully, streamline query logic, and monitor key performance metrics. Implementing caching, table partitioning, and periodic optimization reviews can also help sustain speed and efficiency as workloads expand.

Data consistency

This becomes a challenge when multiple systems or users update the same data without proper synchronization. Inconsistent entries, like mismatched supplier IDs or differing product details across databases, can lead to reporting errors, process delays, and poor decision-making. This issue often arises from schema changes, data duplication, or a lack of unified validation rules.

Schema scalability

When a database grows beyond its initial design, the schema can struggle to accommodate new data sources, entities, or higher query loads. This often leads to slower performance, integration challenges, and costly redesigns as systems evolve.

To keep the schema scalable, teams should adopt modular designs, apply normalization and indexing strategically, and use partitioning to manage large datasets efficiently. Routine performance tuning and schema reviews further ensure that the database can expand alongside business growth.

Normalization issues

Normalization issues arise when database tables are either over-normalized or under-normalized, leading to inefficiencies in data management. Over-normalization can cause excessive joins that slow down queries, while under-normalization results in data duplication and inconsistencies. Both extremes make maintaining and scaling the database more difficult as data volume and relationships grow.

Best Practices to Follow When Designing a Database Schema

Normalize the database

Normalization ensures that data is logically structured, eliminating redundancy and improving consistency across tables. By dividing data into smaller, related tables and linking them through keys, teams can reduce duplication and simplify updates. This practice also enhances query performance and supports long-term scalability by keeping the schema organized and easier to maintain. However, normalization should be balanced with performance needs, avoiding excessive table fragmentation that can slow down complex queries.

Define relationships and constraints

Establishing clear relationships and constraints enforces data integrity and ensures accuracy across interconnected tables. Defining primary and foreign keys helps maintain valid links between entities, like suppliers and purchase orders, while constraints like NOT NULL, UNIQUE, and CHECK prevent invalid or inconsistent entries. These rules not only protect the quality of stored data but also streamline data retrieval and prevent costly errors in downstream analytics and reporting.

Use consistent naming conventions and data types

Consistency in naming and data types improves readability, maintainability, and collaboration among database users. Using standardized prefixes, clear table names, and uniform field naming (e.g., Customer_ID, Order_Date) helps developers and analysts quickly understand the schema’s structure. Likewise, aligning data types across related fields, such as ensuring all date columns follow the same format, prevents compatibility issues and reduces confusion during queries, integrations, and schema updates.

Get the Most out of Your Database Schema Design with Lumi

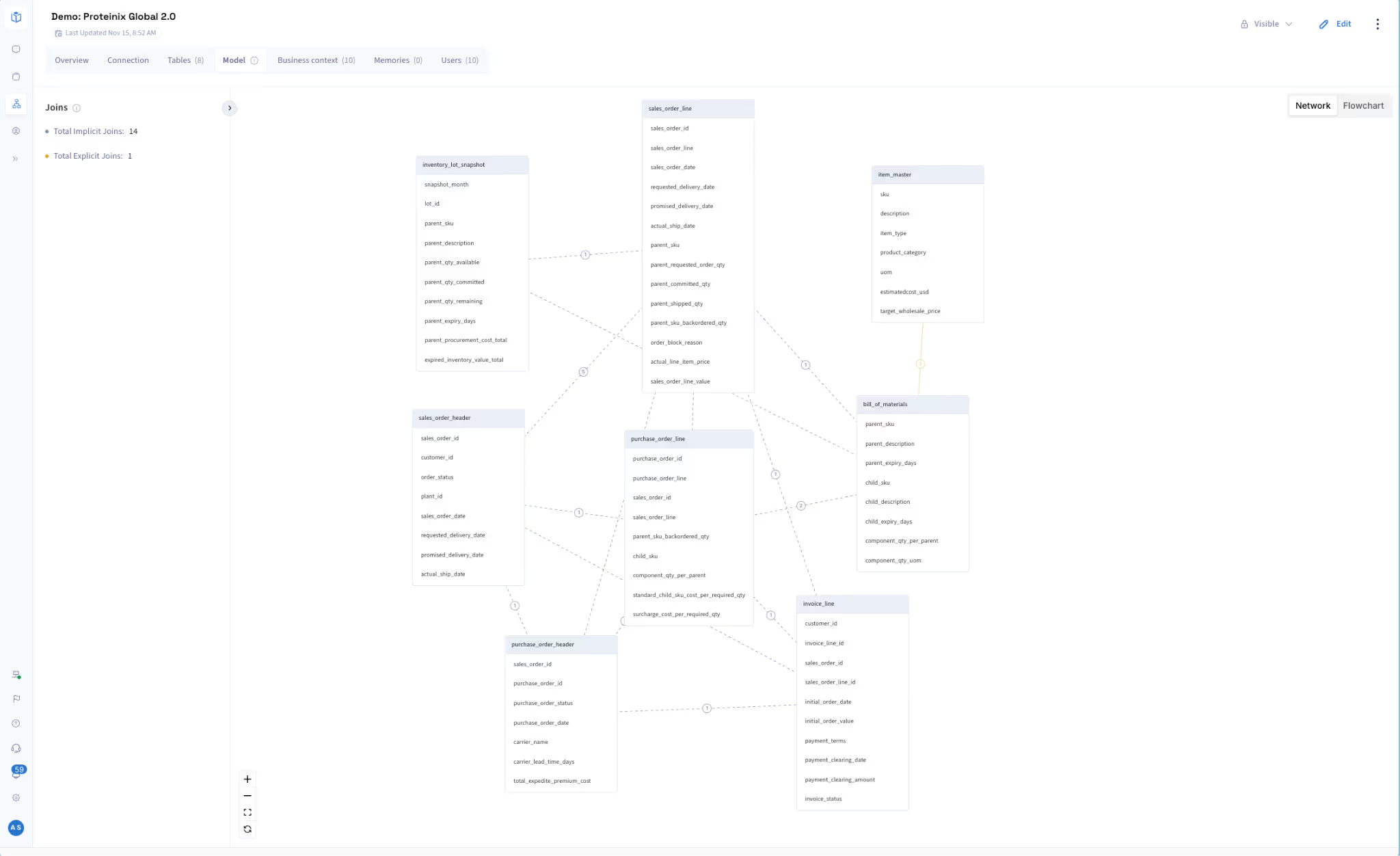

One of the key purposes of good database design is creating a reporting layer that supports business intelligence across large organizations. Traditional BI platforms like Power BI, Tableau, and Looker work with fixed data models, typically star or snowflake schemas built specifically for reporting. These tools need explicitly defined relationships between fact and dimension tables, pre-configured metrics from data engineers, and materialized views for complex calculations. This creates a significant limitation, as users can only answer questions that were anticipated when the model was built. When a new business question requires different join paths or deeper analysis, someone has to go back and rebuild the dataset or reconfigure the model.

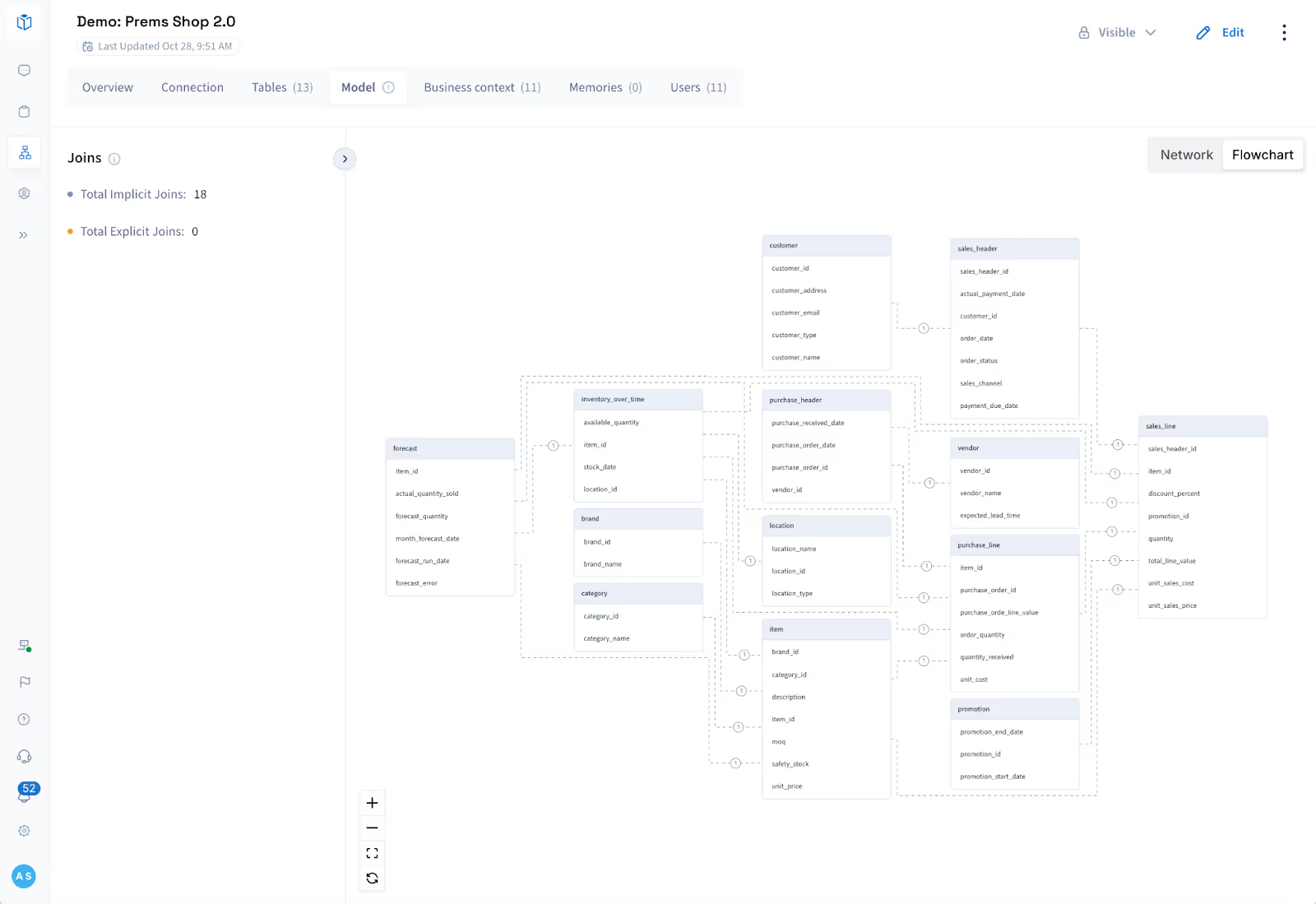

Lumi works differently by generating SQL queries on the fly. It supports recursive CTEs and looping logic, which means it can navigate complex relationships that are impractical to pre-model. Because Lumi works directly with your existing database schema, it pulls relationships automatically, builds joins based on what you're trying to analyze, and handles normalized and semi-structured data without needing ETL processes to reshape everything first.

This makes Lumi especially useful for organizations with complex data environments where relationships between entities change frequently, operational teams that need real-time insights across multiple systems, and analysts doing exploratory work like root-cause analysis or what-if scenarios that require following connections through the data. Traditional BI tools are excellent at answering predefined questions quickly; Lumi helps you find answers to questions you didn't know to ask when you first designed your reporting layer, because it can reason through your data relationships in real time.

Wondering how dynamic reporting can transform your analytics workflow? Book a Lumi demo today to see it in action.

FAQs

Q1. What is the difference between star schema and snowflake schema?

A star schema has a central fact table connected directly to dimension tables, creating a simple and easy-to-query structure. In contrast, a snowflake schema normalizes these dimension tables into multiple related sub-tables, reducing data redundancy but adding complexity. The star schema favours query performance and simplicity, while the snowflake schema prioritizes storage efficiency and data integrity.

Q2. What is the difference between database schema and database instance?

A database schema defines the structure of a database, its tables, relationships, constraints, and data types, serving as the blueprint for how data is organized. A database instance, on the other hand, refers to the actual data stored and managed within that structure at a given time. In short, the schema is the design, while the instance is the living version of that design in action.

Related articles

Experience the Future of Data & Analytics Today

Make Better, Faster Decisions.