Back to blog

Back to Case Studies

How to Effectively Automate Data Analytics using Generative AI.

The need for advanced tools to manage and analyze data efficiently is critical in today's environment. This article focuses on generative AI, AI agents and how Retrieval Augmented Generation (RAG) can be applied to automate SQL queries, offering a practical solution for streamlining responses to a wide variety of simple to complex data and analysis requests.

What is Generative AI in Data Analytics?

Generative AI in data analytics refers to the application of large language models (LLMs) and intelligent agents to analyze, interpret, and generate insights from complex datasets. LLMs, such as OpenAI’s GPT, use advanced natural language processing (NLP) to generate human-like text, interpret queries, and deliver nuanced insights. When combined with agents—autonomous AI systems designed to perform tasks—generative AI takes data analytics to a new level by automating complex processes and enabling dynamic interactions with data.

Key Benefits of Generative AI in Data Analytics

Context Incorporation and Workflow Automation

Generative AI, powered by large language models (LLMs) and intelligent agents, can be given business context surrounding: internal metrics, data schemas, field names, data types and more to be able to generate highly accurate contextual code, or queries for data analytics. The combination context and agents’ task automation accelerates workflows and reduces manual effort.

Improved Accuracy and Anomaly Detection

Multi-agent architectures improves reliability by using intelligent agents to identify inconsistencies, outliers, and potential errors. These systems validate datasets, enhance data quality, and flag irregularities before they impact decision-making.

Democratization of Data Access

With tools powered by NLP, even non-technical users can interact with complex datasets through simple queries like, “What are the sales trends for Q4?” This makes advanced analytics accessible to all levels of an organization.

Automated Insights and Visualization

Generative AI streamlines reporting by creating detailed reports and interactive dashboards with minimal user input. Agents powered by LLMs can aggregate data, generate narratives, and design visualizations that are easy to interpret, saving time and improving clarity.

The Role of SQL in Analytics

In the digital era, data analytics has become indispensable for shaping business strategies and optimizing operational efficiencies. At the core of this data-driven decision-making process lies SQL (Structured Query Language), the cornerstone for interacting with and extracting valuable insights from databases. However, the complexity and often cumbersome nature of databases, filled with vast, vague, and duplicated fields, traditionally necessitate a high level of technical expertise to navigate and utilize effectively.

The task of manually crafting SQL queries, while precise, poses a bottleneck in the analytics workflow, particularly as the volume and complexity of data requests expand. Automating SQL generation emerges as a transformative solution, promising a substantial leap in productivity by simplifying access to analytics for a wider range of users and expediting the insight generation process. This automation not only democratizes data analytics by bridging the gap between technical expertise and strategic decision-making but also frees up valuable time for analysts to concentrate on deeper analytical tasks, heralding a new age of efficiency and accessibility in data intelligence.

Large Language Models (LLMs) Alone are Incapable of Big Data Analytics

The evolutionary trajectory of Large Language Models (LLMs) signifies a drastic shift towards the potential for automating data analytics. This transition is transforming our engagement with vast data repositories, enabling the extraction of meaningful insights on an unprecedented scale. It marks a pivotal moment in making high-quality decision-making accessible across all levels of an organization, thereby inaugurating a new epoch of business intelligence.

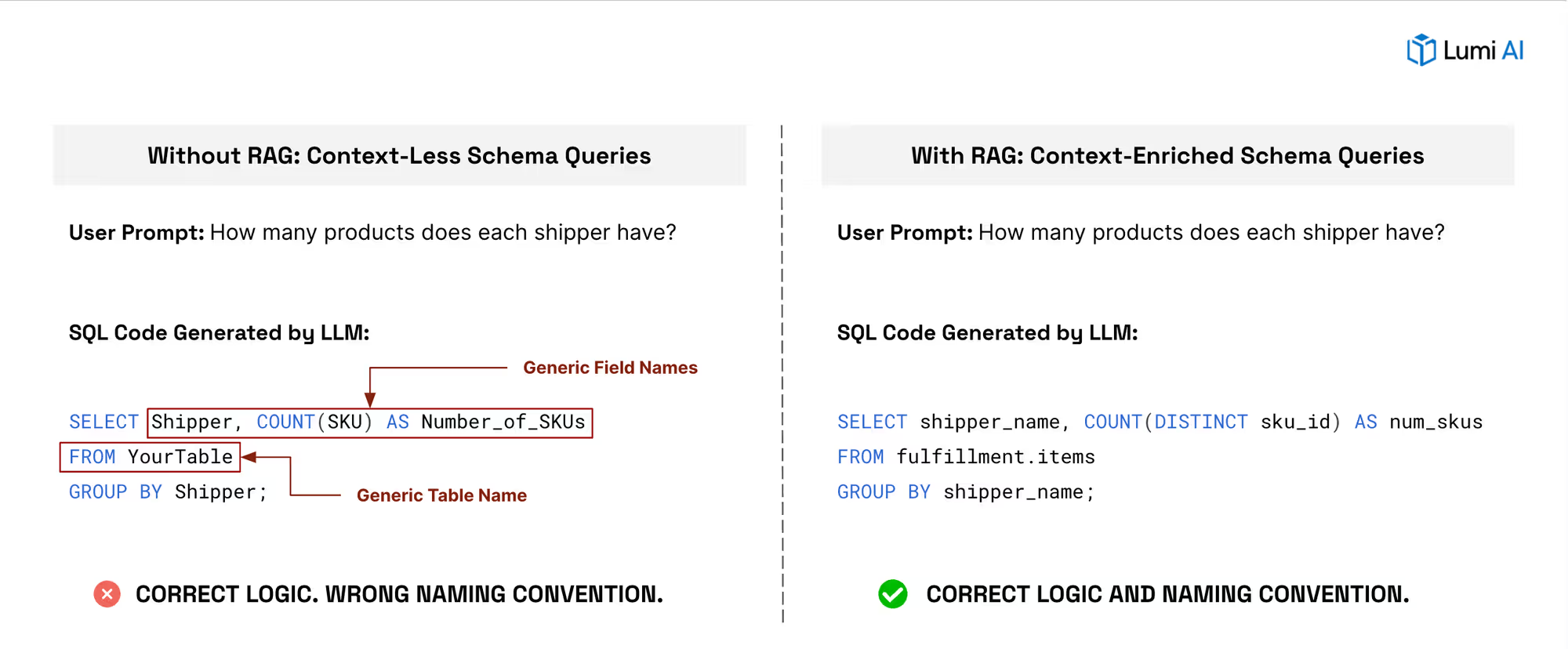

Despite their remarkable capabilities in natural language processing and generation, LLMs encounter significant limitations when applied to the realm of big data analytics, especially without a nuanced understanding of the data environment. Their adeptness at coding and processing linguistic constructs does not inherently equip them with the knowledge of specific SQL variants required for querying databases or the intricacies of complex data models, such as join conditions and relational database schemas. These limitations highlight a gap between the linguistic prowess of LLMs and the technical specificity required for effective data analytics. For more information about semantic layers that can address some of these issues read Semantic Layers and Data Democratization.

The essence of SQL in data analytics lies in its ability to facilitate direct interaction with databases, allowing for the retrieval, manipulation, and analysis of data. However, the application of LLMs to generate SQL queries autonomously is constrained by their lack of contextual awareness regarding the data landscape. Without an intimate knowledge of how data is structured, stored, and interrelated within databases, LLMs are unable to formulate the precise queries needed to extract relevant insights. This challenge underscores the necessity for a bridge that connects the advanced linguistic capabilities of LLMs with the technical demands of SQL-based data analytics.

Enter Retrieval Augmented Generation (RAG): The Key to Automate SQL

The leap forward with the introduction of LLMs was transformative, but the true game-changer in this landscape is the advent of Retrieval Augmented Generation (RAG).

RAG is not just an incremental improvement; it's a paradigm shift that addresses a critical gap in the capabilities of LLMs when applied to the domain of big data analytics, particularly in the generation of SQL queries for data retrieval and manipulation.

What is Retrieval Augmented Generation (RAG)?

Retrieval augmented generation (RAG) is an architecture that provides the most relevant and contextually-important information to large language models (LLMs) when it is performing tasks. This capability significantly boosts the accuracy and reliability of their responses by allowing these models to "consult" externally sourced, verified information, much like referring to a book for answers instead of relying solely on memory.

The core principle behind RAG is simple yet profound: High Accuracy Output = Greater Trust in Model. This foundational trust is crucial, not just for enhancing operational efficiency but also for building confidence in AI-driven decision-making processes.

Using Retrieval Augmented Generation (RAG)

Identifying the need to supply context to LLMs leads to a few questions of what context should be provided, where should it be stored, and how it can be dynamically fed to the models?

What Context should be Provided?

The automation of SQL generation through Retrieval Augmented Generation (RAG) hinges on the provision of precise and relevant context to Large Language Models (LLMs). Initially, this involves supplying fundamental data such as table names and field names, which are crucial for the syntactical accuracy of SQL queries. Yet, to truly harness the potential of LLMs in generating effective SQL, the scope of context needs expansion beyond these basics.

Incorporating detailed insights about join conditions, table-specific nuances, and specialized business terminology markedly improves the LLMs' ability to craft not only accurate but logically sound and actionable SQL queries. This enriched context empowers LLMs to understand and navigate the complex web of data relationships, enabling more advanced querying capabilities that can unlock deeper insights from the data.

Examples of Business terminology:

Raw Materials are items where the item category code = 'RM'. Active merchants are those who have placed 1 order in past 12 monthsNavigating the complexities of databases—often vast, ambiguous, and fraught with duplications—demands a strategic approach to the contextual information provided to LLMs. Overloading models with too much context can muddle their processing abilities, leading to suboptimal query generation. Conversely, too little context might leave LLMs groping in the dark, unable to formulate meaningful queries. Thus, achieving the right contextual balance is imperative, necessitating ongoing adjustments to finely tune the information fed to LLMs.

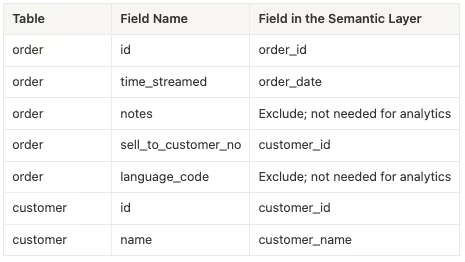

Central to achieving this balance is the development of a well-defined semantic layer. This layer acts as a navigational guide for LLMs, simplifying and condensing complex data structures into formats that are both human-readable and AI-friendly. Beyond simplifying data access, a robust semantic layer enables LLMs to perform more advanced types of querying, such as predictive analyses, complex joins, and dynamic aggregations, thereby expanding the scope and depth of insights that can be derived from the data.Here are practical examples of how the semantic layer transforms raw data into a streamlined format:

This transformation isn't merely about accessibility; it equips LLMs with the insights necessary to navigate and interpret the data landscape effectively. With a well-constructed semantic layer, LLMs can advance beyond basic data retrieval to execute sophisticated querying operations, thereby significantly enhancing the analytical capabilities and business intelligence derived from big data analytics.

Where to Store the Context?

The technology landscape offers several storage options, each with its unique strengths and considerations. Vector databases stand out as a recommended choice due to their efficiency in handling dynamic, context-rich data. They excel in facilitating the rapid retrieval of context necessary for LLMs to generate accurate and relevant SQL queries, making them a prime choice for RAG applications.

However, NoSQL databases and conventional data warehouses like BigQuery also present viable options, depending on specific use cases and requirements. NoSQL databases offer flexibility in storing unstructured or semi-structured data, which can be beneficial when dealing with diverse data types and structures. On the other hand, data warehouses provide robust solutions for managing large volumes of structured data, with BigQuery offering scalable and serverless insights into data analytics.

When selecting a storage solution, it's essential to weigh factors such as latency and cost implications. Latency is critical in ensuring real-time or near-real-time access to contextual information, which can significantly impact the performance and responsiveness of the RAG system. Cost considerations are equally important, as the choice of storage solution can influence the overall cost-efficiency of deploying and maintaining the RAG system.

Ultimately, the decision on where to store the context should align with the organization's data strategy, taking into account the specific requirements for accessibility, scalability, and performance. By carefully selecting the appropriate storage solution, organizations can effectively support the dynamic retrieval of context, enabling LLMs to generate more accurate and insightful SQL queries through the power of RAG.

How to Dynamically Retrieve Context?

- Transform Queries and Context into Embeddings. Retrieving the most relevant information to accurately respond to user prompts starts by vectorizing the user's prompt alongside the database's contextual data into embeddings. This technique essentially translates natural language into a mathematical representation, laying the groundwork for identifying semantic similarities between the query and the stored information.

- Match Query Embeddings with Database Context. Once vectorized, the next step involves comparing the query's embedding against those derived from the database context. This comparison, predominantly done through cosine similarity due to its effectiveness in identifying semantic nuances, calculates similarity scores. The objective is to pinpoint the context embeddings that best match the query, ensuring that the model's response is as relevant and accurate as possible.

- Select the Right Context. The art of selecting the right context is crucial. It involves choosing context data that is closely aligned with the query, based on the k-nearest embedding vectors. This careful selection process ensures that the model's generated responses are not just syntactically precise but also semantically reflective of the user's intent. The aim is to enrich the model's understanding, guiding it to produce SQL queries that delve directly into the heart of the user's request.

To further refine the model's focus, each user prompt is usually augmented with a set of tailored instructions. These instructions are crafted to direct the model towards specific, customized tasks, effectively serving as guardrails to keep the model on track and mitigate the risk of generating irrelevant or off-target responses (‘hallucinations’). For instance:

This approach not only streamlines the model's ability to generate relevant SQL queries but also ensures the outputs are intricately tailored to the nuances of the user's request, significantly enhancing the effectiveness and reliability of automated SQL generation through Retrieval Augmented Generation (RAG). This methodology underscores the potential of RAG in revolutionizing SQL query generation, making sophisticated data analysis more accessible and efficient.

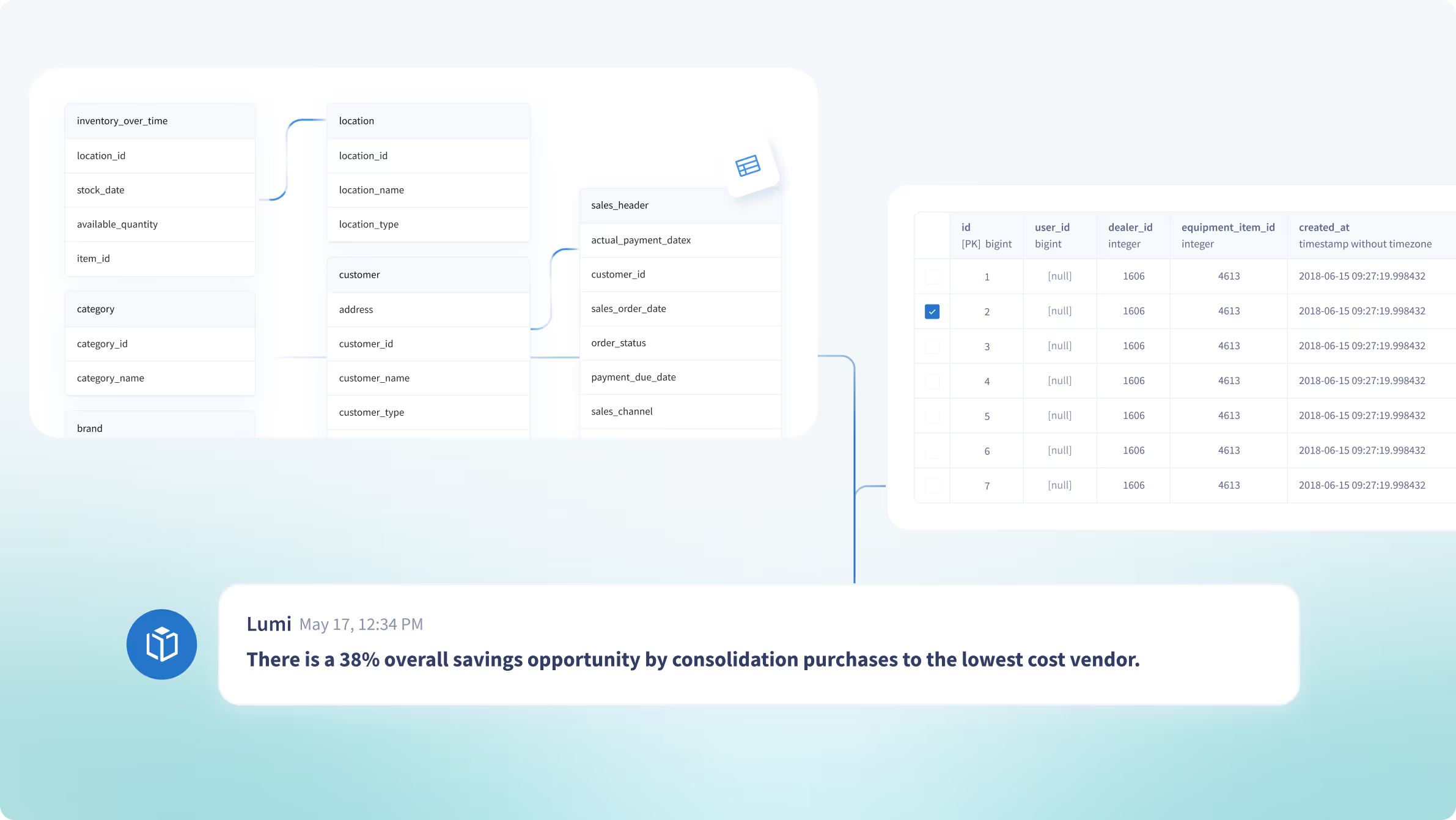

An Alternative to Building: Introducing Lumi AI's Knowledge Base

Constructing an in-house system that aligns Large Language Models (LLMs) with the specific needs of your business for automated data request responses can be intricate and prohibitively expensive.

Lumi AI offers a cost-effective and rapidly deployable solution as an alternative to the demanding process of in-house development. Its intuitive Knowledge Base interface enables administrators to seamlessly introduce Lumi to the specific data tables, fields, KPI calculations, and business terminology pertinent to your operations, significantly streamlining the AI integration process.

Central to the efficacy of Lumi AI is its Knowledge Base, enriched with domain expertise in critical areas such as CPG, Retail, and Supply Chain, and continuously expanding. The platform's readiness to deliver from the moment of deployment is assured by the diverse and experienced contributions from data scientists and industry experts, ensuring Lumi AI's offerings are precisely calibrated to your business's intricacies.

Lumi AI demonstrates the effective application of Retrieval Augmented Generation (RAG) to overcome common industry hurdles, merging standard integrations with a symphony of AI agents to furnish consistent, accurate, and actionable insights. This establishes Lumi AI as an indispensable tool for the forthcoming era of AI-assisted data analysis.

Discover how Lumi AI can elevate your data analytics and decision-making by signing up. Start your path to accessing AI-generated actionable insights with unparalleled ease today. Book a demo.

Make Better, Faster Decisions.

.avif)

.avif)

.avif)